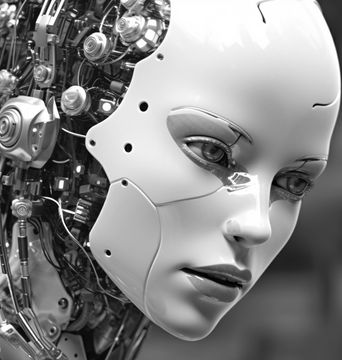

STUDY: PEOPLE ARE MORE LIKELY TO JUDGE AI-GENERATED WHITE FACES AS REAL THAN PHOTOS OF ACTUAL HUMANS, WHICH COULD LEAD TO DIGITAL IMPOSTORS!

As humankind's relationship with artificial intelligence (AI) continues to evolve, it is increasingly vital to understand not only how these systems can affect us but also how our own biases may be woven into the very fabric of AI creations. A recent study underscores this, revealing that people are more likely to judge AI-generated white faces as human than photographs of real individuals. The potential consequences range from identity theft to the deepening of social biases and disparities.

The study, conducted by research teams based in Australia, the UK, and the Netherlands, carries unsettling implications. Participants perceived AI-generated white faces as more human-like than photos of real people. This finding, as fascinating as it is troubling, could ease the path for digital impostors. Combined with steadily improving technology, it could provide fertile ground for identity theft, as the line between artificial and real faces becomes ever harder for people to see.

However, this phenomenon was confined to white faces, most likely because the AI was trained predominantly on images of white people. This caveat points to a bias not only in human perception but also in the AI systems themselves, a consequence of the data used to train them. In other words, a racially skewed training set is shaping how society perceives and trusts images.

The implications extend beyond identity theft to areas such as online therapy and robotics. Someone seeking therapy online, for instance, could be interacting with an AI without realizing it. Furthermore, the biases built into such systems could quietly reinforce existing social biases, pushing society further away from equality and cohesion.

While humans struggle to tell real faces from AI-generated ones, a machine learning system developed by the research team fared far better. With an accuracy rate of 94%, it substantially outperformed human participants, casting a critical light on the limits of human perception in the digital age.

Dr Zak Witkower and Dr Clare Sutherland, co-authors of the study, welcomed the rise of AI but warned about the unaddressed biases baked into these systems. They stressed the need to ensure equal treatment regardless of ethnicity, gender, age, or any other protected characteristic.

As society becomes ever more intertwined with the digital sphere, it is clear that our interactions with AI are shaping more than our perception; they are shaping our future. This research is a clarion call for technologists, policymakers, and the public alike. As the AI revolution advances, tackling the biases built into these systems becomes crucial. Balancing technological progress with the preservation of humanity and equality in the truest sense is an urgent task. Without such checks, we may drift unknowingly toward a future in which AI exacerbates inequality rather than fostering a fair, balanced, and egalitarian society.